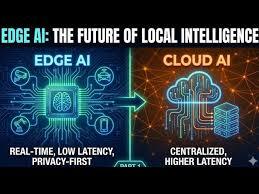

The 2026 Shift: In 2024, we sent everything to the cloud. In 2026, we realize that “The Cloud” is expensive, slow for real-time tasks, and a major privacy risk. The solution? Edge AI—processing data directly on your local hardware, laptops, and IoT devices.

Table of Contents

- What is Edge AI? (The ‘Local Brain’ Explained)

- The Financial Case: Cutting the ‘Cloud Tax’

- The Compliance Case: Solving the DPDP Headache

- Hardware Spotlight: NPUs and the ‘AI PC’

- How to Transition: The Eduglar Hybrid-Edge Strategy

<a name="what-is-edge"></a>

1. What is Edge AI? (The ‘Local Brain’ Explained)

Traditionally, when you use AI (like a chatbot or image scanner), your data travels to a giant data center, gets processed, and comes back. That round trip adds latency (delay).

Edge AI moves that “inference” process to the device itself—whether it’s a smart camera in your warehouse, a medical device in a hospital, or the laptop on your desk.

- Latency in 2026: For autonomous systems or industrial robots, waiting 500ms for a cloud round trip is too long. On-device inference skips the network hop, so Edge AI can respond in under 10ms.

- Reliability: If your internet goes down, your AI keeps working. It is “Offline-First” by design.
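To make the latency gap concrete, here is a back-of-the-envelope sketch in Python. The function name and the 2 m/s speed are illustrative assumptions, not figures from a real deployment; the 500ms and 10ms values are the ones quoted above.

```python
def distance_before_response(speed_m_per_s: float, latency_ms: float) -> float:
    """Distance in meters a moving object covers while waiting for an AI response."""
    return speed_m_per_s * (latency_ms / 1000.0)


# Assumed example: a conveyor belt or vehicle moving at 2 m/s.
SPEED_M_PER_S = 2.0

cloud_gap = distance_before_response(SPEED_M_PER_S, 500)  # cloud round trip
edge_gap = distance_before_response(SPEED_M_PER_S, 10)    # on-device inference

print(f"Cloud: {cloud_gap:.2f} m travelled before a response")
print(f"Edge:  {edge_gap:.2f} m travelled before a response")
```

At that assumed speed, the cloud path lets the object move a full meter before the system can react, versus about two centimeters on the edge path.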

<a name="financial-case"></a>

2. The Financial Case: Cutting the ‘Cloud Tax’

By February 2026, “Token Burn” (the per-token fees you pay a cloud AI provider) has become a major line item in corporate budgets.

- Bandwidth Savings: Sending high-definition video feeds to the cloud for AI analysis is incredibly expensive in terms of data costs. Edge AI analyzes the video locally and only sends a tiny text alert when it sees an anomaly.

- Scalability without Subscriptions: Once you own the hardware (Edge devices), your “per-task” cost drops to nearly zero. You aren’t paying a monthly fee to an AI provider for every single interaction.

- The ROI: Companies shifting to Edge AI in 2026 report a 30% to 50% reduction in cloud operational costs.
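If you want to sanity-check these claims against your own numbers, a rough sketch like the one below may help. Both function names and every input figure (a 4 Mbps camera stream, the hardware price, the monthly costs) are hypothetical placeholders, so swap in your own bills before drawing conclusions.

```python
from typing import Optional


def monthly_upload_gb(bitrate_mbps: float, hours_per_day: float, days: int = 30) -> float:
    """Data uploaded per month, in decimal gigabytes, for one continuous video stream."""
    megabits = bitrate_mbps * 3600 * hours_per_day * days
    return megabits / 8 / 1000  # megabits -> megabytes -> gigabytes


def break_even_months(
    hardware_cost: float,
    monthly_cloud_cost: float,
    monthly_edge_cost: float,
) -> Optional[float]:
    """Months until the edge hardware pays for itself, or None if it never does."""
    monthly_saving = monthly_cloud_cost - monthly_edge_cost
    if monthly_saving <= 0:
        return None  # the edge setup is not cheaper to run, so there is no payback
    return hardware_cost / monthly_saving


# Assumed inputs: one 4 Mbps camera streaming to the cloud around the clock.
print(f"Upload per camera: {monthly_upload_gb(4, 24):.0f} GB/month")

# Assumed inputs: 6000 of hardware, 800/month cloud bill, 200/month edge running cost.
print(f"Break-even after: {break_even_months(6000, 800, 200)} months")
```

With these placeholder inputs, a single camera pushes roughly 1.3 TB a month to the cloud, and the hardware pays for itself in 10 months. The per-task cost is only "nearly zero" after that point, which is why the running cost of the edge device (power, maintenance) is a separate parameter.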

<a name="compliance"></a>

3. The Compliance Case: Solving the DPDP Headache

As we discussed in our last blog post, India’s Digital Personal Data Protection (DPDP) Act makes data transfer a risky business.

- Data Sovereignty: With Edge AI, personal data never leaves the device.

- Private-by-Design: If you are a healthcare provider or a bank, processing sensitive biometric data locally means you don’t have to worry about “data-in-transit” breaches or cross-border transfer restrictions.

- The Verdict: Edge AI is the “Easy Button” for data privacy compliance in 2026.
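In practice, keeping the data on the device usually means the raw input is analyzed locally and only a small, non-sensitive summary is ever transmitted. The sketch below illustrates that pattern in Python. The `build_alert` function, its field names, and the 0.8 threshold are all assumptions made for illustration, and the anomaly score is presumed to come from whatever model runs on your device.

```python
import json
from typing import Optional


def build_alert(device_id: str, anomaly_score: float, threshold: float = 0.8) -> Optional[str]:
    """Return a small JSON alert, or None when nothing needs to leave the device.

    The raw frame or biometric sample is never passed in here: only the score
    computed locally by the on-device model is used.
    """
    if anomaly_score < threshold:
        return None  # routine reading: keep everything local
    payload = {
        "device": device_id,
        "event": "anomaly",
        "score": round(anomaly_score, 2),
    }
    return json.dumps(payload)


print(build_alert("cam-1", 0.42))  # below the threshold, so nothing is sent
print(build_alert("cam-1", 0.93))  # a short text alert with no raw data attached
```

The point of the design is that the outgoing message is built from derived values only, so the payload stays small and the sensitive input has no path off the device.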

<a name="hardware"></a>

4. Hardware Spotlight: NPUs and the ‘AI PC’

In 2026, you aren’t just buying a “laptop”; you’re buying an AI PC equipped with a Neural Processing Unit (NPU).

- What is an NPU? It’s a chip designed specifically to run AI models, consuming up to 10x less power on those workloads than a general-purpose processor.

- Why it matters for your budget: These chips allow your employees to run “Small Language Models” (SLMs) locally for writing, coding, and data analysis without needing a $20/month subscription to a cloud AI.

<a name="transition"></a>

5. How to Transition: The Eduglar Hybrid-Edge Strategy

You don’t need to abandon the cloud entirely. Most successful 2026 firms use a Hybrid-Edge model:

- Edge: Handles real-time tasks, privacy-sensitive data, and routine automation.

- Cloud: Handles heavy model training, long-term data archiving, and “Big Picture” analytics.
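The split above can be expressed as a simple routing rule. Here is a minimal Python sketch of one way to do it. The `Task` fields, the task-kind labels, and the rule that privacy and real-time needs always win over everything else are our assumptions for illustration, not a fixed standard.

```python
from dataclasses import dataclass

# Assumed labels for the workloads the cloud handles in a Hybrid-Edge setup.
CLOUD_KINDS = {"model_training", "archiving", "analytics"}


@dataclass(frozen=True)
class Task:
    kind: str
    real_time: bool = False
    has_personal_data: bool = False


def route(task: Task) -> str:
    """Decide where a task runs: 'edge' or 'cloud'."""
    # Privacy-sensitive and real-time work stays local, whatever its kind.
    if task.has_personal_data or task.real_time:
        return "edge"
    # Heavy, non-urgent workloads go to the cloud.
    if task.kind in CLOUD_KINDS:
        return "cloud"
    # Everything else is treated as routine automation and runs locally.
    return "edge"


print(route(Task("defect_detection", real_time=True)))    # edge
print(route(Task("analytics")))                           # cloud
print(route(Task("analytics", has_personal_data=True)))   # edge
```

Checking the privacy and real-time flags first is a deliberate choice in this sketch: a task that touches personal data stays on the edge even if it would otherwise qualify for the cloud.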

How Eduglar helps you switch:

- Hardware Refresh: We help you identify which of your current assets can be upgraded with Edge-capable hardware.

- Local Model Deployment: We install and optimize “Small Language Models” (like Llama 3 8B or Mistral 7B) on your private servers.

- Infrastructure Security: We harden your Edge nodes so your local “brains” are protected from physical and digital tampering.

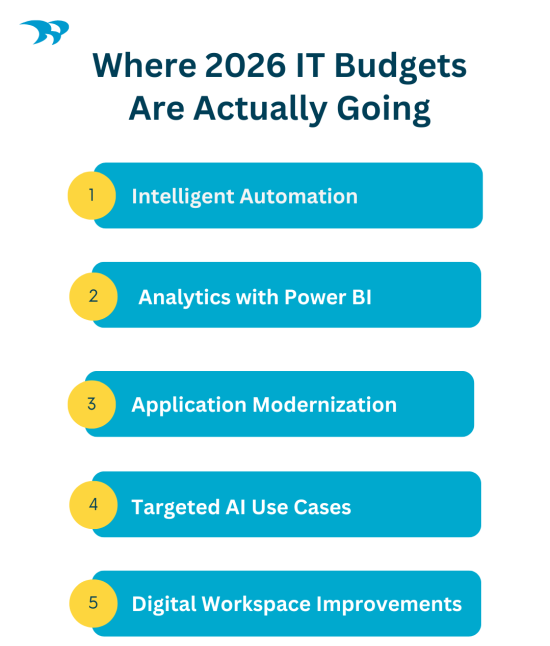

Stop paying the “Cloud Tax.” Start owning your intelligence.

[Talk to our Infrastructure Team about Edge AI] | [Download the AI Hardware Buyer’s Guide 2026]